Ovetta Sampson's Call for More Human AI

Written by Erin Malone

Ovetta Sampson has been concerned with ethical AI in design for most of her user experience design career. She began as a journalist, starting on the night-shift police beat at the St. Joseph News-Press, and spent several years as a reporter. In the early 2000s, she began writing content for the web. That first web job evolved into a program manager role in which she was still writing but also managing marketing campaigns and editing. Another magazine job, more editing, and writing all kinds of marketing and web content eventually led her to consulting and to creating UX content. As a consultant, Sampson handled product management, content management, search engine optimization (SEO), writing, editing, and other content and editorial work for teams that didn’t have their own staff.

In 2014 she went back to school at DePaul University in Chicago and earned an MS in Computer Science with a concentration in HCI. That shift in focus took her to IDEO as a design research lead, and it was there that she began to design with data science and to bring human-centered approaches into the development of artificial intelligence products. During her time at IDEO, Sampson worked on many kinds of emerging AI technologies, including autonomous vehicles, predictive services, and AR/VR products.

When she left IDEO, Sampson moved to Microsoft for a couple of years, working with Microsoft’s largest enterprise customers on a variety of cross-industry products built on data and AI. She then joined Capital One to lead efforts to create human-centered AI and bring a more ethical mindset to its machine learning and data practices. Most recently, she has been working at Google, bringing human-centered design to machine learning.

Caring deeply about the human part of human-centered design, Sampson often speaks and writes about why designers need to be part of the process of designing and developing algorithms and AI. In 2018 she published a piece, first on LinkedIn and then on Medium, that defined her AI and ethics manifesto. Her guiding principles still resonate and should be adopted by anyone working in AI.

These principles are essential to any interaction designer working on products, whether or not AI is involved.

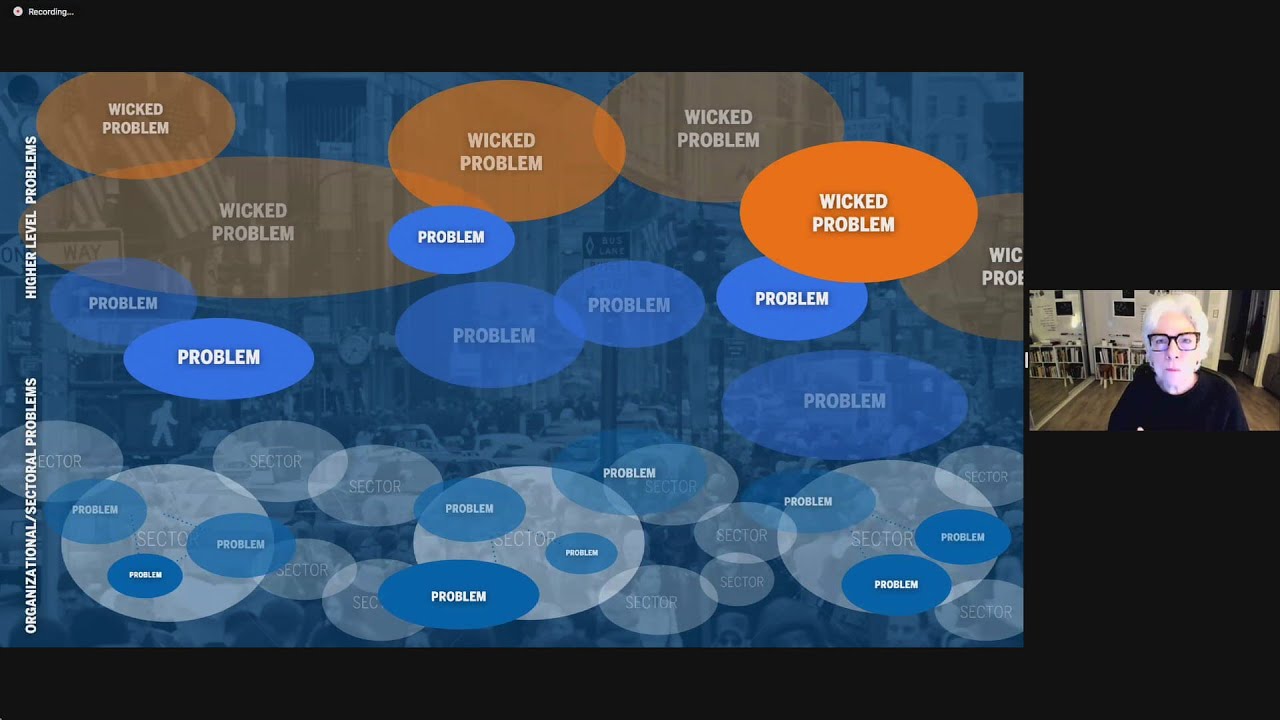

In a 2021 essay for Fieldsights, published by the Society for Cultural Anthropology, Sampson wrote that “it’s clear that using traditional user research methods, such as interviewing, to gain confidence in AI product design direction has limiting factors. These factors include the almost universal shallowness of AI literacy among product makers and consumers, the focus on a uniagency (single-agency) framework during interaction design research, and a lack of integration of design research and data science during the product research phase.”[2] In a piece for Interactions, she goes further, calling for bringing the tools of design anthropology into the work to truly understand people’s needs in the context of their “culture, values, and human rituals.”[3]

Sampson cites the work of educator Dori Tunstall when describing how design anthropology can help humanize the work of creating AI. Her ideas about humanity and data have been part of her design approach from the beginning. “When I was 9 years old, my dad bought a Commodore 64 and spent nights learning how to program it. My dad worked a lot so I didn’t get to spend a lot of time with him—but I would go down in the basement while he was using Fortran and COBOL. I was like, ‘I want to learn that!’ And he showed me how to program. It gave me this really amazing connection to the technology that has always been seared into me. It taught me from the beginning to not divide my humanity from tech. Data, numbers, and people were always intertwined. I learned later, in journalism school, that data was always part of evidence.”[4]

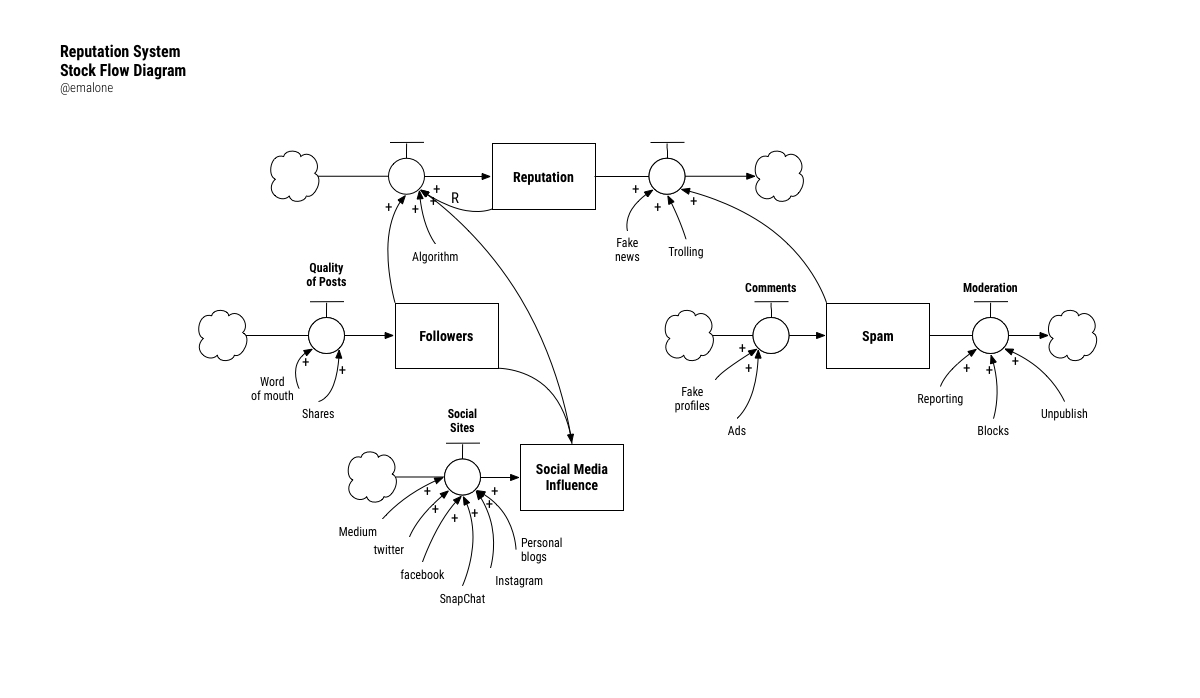

She also talks about the data that companies collect and how separated it is from the actual people it represents. This separation (she uses the word “divorced”) allows companies to take liberties with the information, often in unethical ways, because they have forgotten about the people behind the data.

“For us to create human-centered products in an intelligent era, we have to have people who understand human behavior and motivation working alongside people building algorithms. To do that, we must have UX researchers who understand how algorithms are built. We can no longer work in a silo.”[5]

For the past several years she has been teaching courses in machine learning and design at DePaul University, and in 2023 she was named one of the top 100 people in enterprise artificial intelligence by Business Insider.

Ovetta Sampson's AI & Ethics Manifesto

Put Humans First

Is what I’m creating centered around a human need? Will it serve humans? Will it allow humans to do, be and become better? In short, is it human centered? If not, rethink the solution to make it so.

Protect Privacy

Will what I create violate someone’s privacy rights? If so, how are they notified, affected, and in what ways? Can they use this product and maintain their individual privacy rights? If not, do they know what they’re sacrificing? Is what they’re gaining really as valuable as the fundamental right to privacy? Or is the give-get exchange lopsided?

Preserve Identity Choice

Will what I create strip away a person’s anonymity? If so, why, how, what are the ramifications of that, is this acceptable, and if not, are there ways to prevent this?

Create Safety

Will what I create harm others? If so, in what way and why? How easily can someone use what I create to harm others? Are there ways to prevent this? How do I create checks and balances to prevent abuse and misuse?

Deliver Equity

Can what I build be used to harm a protected class? And in what way? Is what I build excluding people? Why? Is my design equitable? Does it use privilege as a starting point and never deviate? Can my model be applied equally with no negative consequences for people of different races, ethnicities, backgrounds, and incomes?

Start with Good Intended Use

Does this tool have an intended non-harmful use? Even so, could it eventually do harm? To whom and in what ways? How do you guard against abuse? Do I have checkpoints and milestones to iterate and check on my model embedded in the design? How will my model be used five years from now? 10? How will I know?

Emphasize Transparency

Can others trace how I created this data-driven product? Is my process auditable? Can anyone trace back to understand how my model was created? If not, why? Are all the people involved made aware of what I’ve created? If not, why not?[1]

Footnotes

[1] Ovetta Sampson, “My Data and Design Ethics Manifesto,” Medium, October 11, 2018, https://medium.com/towards-data-science/my-data-and-design-ethics-manifesto-e9a2374345b7.

[2] Ovetta Sampson, “A Case for Design Anthropology for Creating Human-Centered AI,” Society for Cultural Anthropology, August 9, 2021, https://culanth.org/fieldsights/a-case-for-design-anthropology-for-creating-human-centered-ai.

[3] Ovetta Sampson, “A Lovely Day: An Optimistic Vision for an Automated Future,” Interactions 28, no. 1 (January 2021): 84–86, https://doi.org/10.1145/3439841.

[4] Tony Ho Tran, “Marry Data Science with User Research. Ethical Design Depends on It,” dscout.com, September 11, 2019, https://dscout.com/people-nerds/marry-data-science-with-user-research-ethical-design-depends-on-it.

[5] Ibid.

Bibliography

Julia. “Ovetta Sampson.” People Issue 2014, 2014. http://people2014.chicagoreader.com/ovetta-sampson/.

Sampson, Ovetta. “A Case for Design Anthropology for Creating Human-Centered AI.” Society for Cultural Anthropology, August 9, 2021. https://culanth.org/fieldsights/a-case-for-design-anthropology-for-creating-human-centered-ai.

———. “A Lovely Day: An Optimistic Vision for an Automated Future.” Interactions 28, no. 1 (January 2021): 84–86. https://doi.org/10.1145/3439841.

———. “Make the Impossible Possible | Ovetta Sampson | TEDxUChicago.” www.youtube.com, June 17, 2015. https://www.youtube.com/watch?v=P5ljcofA5q0&t=43s.

———. “My Data and Design Ethics Manifesto.” Medium, October 11, 2018. https://medium.com/towards-data-science/my-data-and-design-ethics-manifesto-e9a2374345b7.

Sampson, Ovetta. “About.” Ovetta Sampson, 2017. http://www.ovetta-sampson.com/about-ovetta.

Tran, Tony Ho. “Marry Data Science with User Research. Ethical Design Depends on It.” dscout.com, September 11, 2019. https://dscout.com/people-nerds/marry-data-science-with-user-research-ethical-design-depends-on-it.

Check out some of Ovetta's talks about AI on YouTube

Activism in AI with Google's Ovetta Sampson - Design Observer Design of Business interview

Generative AI and creative arms race - Ovetta Patrice Sampson (Config 2023)

Google's Ovetta Sampson, Design in an Automated Future, Google Next and vTeam

Navigating AI, Leadership & Biases with Ovetta Sampson

Selected Stories

Sasha Costanza-ChockProject type

Kaaren HansonProject type

Ari MelencianoProject type

Mizuko Itoresearch

Boxes and ArrowsProject type

Mithula NaikCivic

Lili ChengProject type

Ovetta SampsonProject type

Yehwan SongProject type

Anicia PetersProject type

Simona MaschiProject type

Jennifer BoveProject type

Chelsea JohnsonProject type

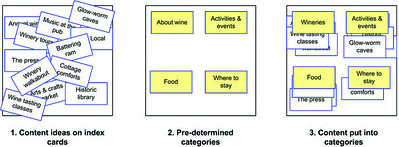

Donna SpencerProject type

Lisa WelchmanProject type

Sandra GonzālesProject type

Amelie LamontProject type

Mitzi OkouProject type

The Failings of the AIGAProject type

Jenny Preece, Yvonne Rogers, & Helen SharpProject type

Colleen BushellProject type

Aliza Sherman & WebgrrrlsProject type

Cathy PearlProject type

Karen HoltzblattProject type

Sabrina DorsainvilProject type

Lynda WeinmanProject type

Irina BlokProject type

Jane Fulton SuriProject type

Carolina Cruz-NeiraProject type

Lucy SuchmanProject type

Terry IrwinProject type

Donella MeadowsProject type

Maureen StoneProject type

Ray EamesProject type

Lillian GilbrethProject type

Mabel AddisProject type

Ángela Ruiz RoblesDesigner